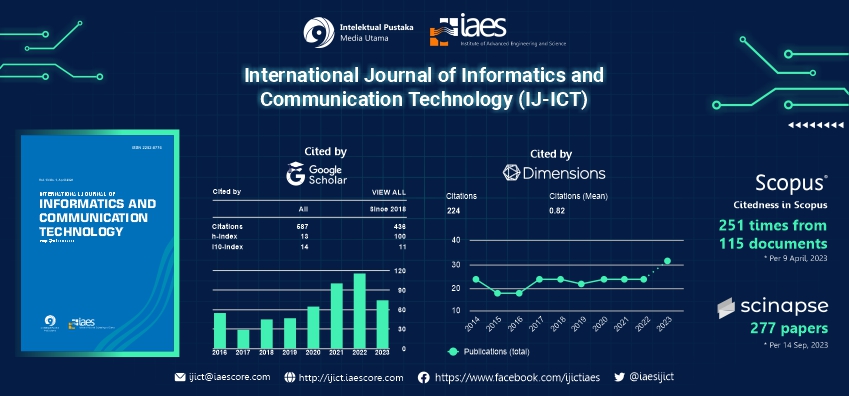

The International Journal of Informatics and Communication Technology (IJ-ICT), p-ISSN 2252-8776, e-ISSN 2722-2616, is a common platform for publishing quality research papers as well as other intellectual outputs. This journal is aimed at promoting the dissemination of scientific knowledge and technology in the information and communication technology areas in front of an international audience of the scientific community, to encourage the progress and innovation of technology for human life, and also to be the best platform for the proliferation of ideas and thoughts for all scientists, regardless of their locations or nationalities. The IJ-ICT covers all areas of informatics and communication technology (ICT) and focuses on integrating hardware and software solutions for storage, retrieval, sharing, manipulation management, analysis, visualization, interpretation, and their applications for human services programs and practices. It publishes refereed original research articles and technical notes. It is designed to serve researchers, developers, managers, strategic planners, graduate students, and others interested in state-of-the-art research activities in ICT.

This journal is published by the Institute of Advanced Engineering and Science (IAES) in collaboration with Intelektual Pustaka Media Utama (IPMU). Now, this journal is accredited by the Indonesian Ministry of Education, Culture, Research, and Technology. This journal has also just been accepted for inclusion in Scopus. Scopus is currently indexing articles from this journal.

Submit your paper now through online submission ONLY.

or

or  .

.Submit your manuscripts today!

Please do not hesitate to contact us if you require any further information at email: ijict@iaescore.com, info@iaesjournal.com

All Issues:

- 2023: Vol. 12 No. 1, Vol. 12 No. 2, Vol. 12 No. 3

- 2022: Vol. 11 No. 1, Vol. 11 No. 2, Vol. 11 No. 3

- 2021: Vol. 10 No. 1, Vol. 10 No. 2, Vol. 10 No. 3

- 2020: Vol. 9 No. 1, Vol. 9 No. 2, Vol. 9 No. 3

- 2019: Vol. 8 No. 1, Vol. 8 No. 2, Vol. 8 No. 3

- 2018: Vol. 7 No. 1, Vol. 7 No. 2, Vol. 7 No. 3

- 2017: Vol. 6 No. 1, Vol. 6 No. 2, Vol. 6 No. 3

- 2016: Vol. 5 No. 1, Vol. 5 No. 2, Vol. 5 No. 3

- 2015: Vol. 4 No. 1, Vol. 4 No. 2, Vol. 4 No. 3

- 2014: Vol. 3 No. 1, Vol. 3 No. 2, Vol. 3 No. 3

- 2013: Vol. 2 No. 1, Vol. 2 No. 2, Vol. 2 No. 3

- 2012: Vol. 1 No. 1, Vol. 1 No. 2

Announcements

International Journal of Informatics and Communication Technology has been accepted for Scopus |

|

We are pleased to announce that "International Journal of Informatics and Communication Technology, p-ISSN 2252-8776, e-ISSN 2722-2616" was accepted for inclusion in the international database "Scopus" on November 10, 2023, based on the decision of the Expert Council for content selection in (Scopus Content Selection and Advisory Board). We now invite you to share this information and submit more of your work! |

|

| Posted: 2023-11-11 | More... |

M.Eng. (S2) in Electrical Engineering (Intelligent Systems Engineering) |

|

| Want to study Master's Program of Electrical Engineering at Universitas Ahmad Dahlan, Yogyakarta? We are pleased to announce that the Indonesia Ministry of Education, Culture, Research, and Technology has approved (https://s.id/1G0RL) the establishment of the Master of Electrical Engineering in Intelligent Systems Engineering (ISE) Program at Universitas Ahmad Dahlan. In our Master in Intelligent Systems Engineering, you will gain technical expertise in computational engineering and artificial intelligence—the future of engineering and problem solving. Please take a look at the attached flyer #1 (https://s.id/1G0O8) or flyer #2 (https://s.id/1G0On). |

|

| Posted: 2023-04-12 | |

| More Announcements... |

Vol 13, No 1: April 2024

Table of Contents

|

Sabeena Yasmin Hera, Mohammad Amjad

|

1-8

|

|

Shehram Sikander Khan, Akalanka Bandara Mailewa

|

9-26

|

|

Gyana Ranjana Panigrahi, Nalini Kanta Barpanda, Prabira Kumar Sethy

|

27-33

|

|

Geerish Suddul, Jean Fabrice Laurent Seguin

|

34-41

|

|

Muhammad Alwan Naufal, Abba Suganda Girsang

|

42-49

|

|

Shania Priccilia, Abba Suganda Girsang

|

50-56

|

|

Nagaki Kentarou, Fujita Satoshi

|

57-66

|

|

Deepa Das, Rajendra Kumar Khadanga, Deepak Kumar Rout

|

67-79

|

|

Nova Winda Rajagukguk, Wulan Suwarno, Adilla Anggraeni

|

80-90

|

|

Ferawaty Ferawaty, Wakky Antonio, Adilla Anggraeni

|

91-100

|

|

Narayanan Swaminathan, Murugesan Rajendiran

|

101-107

|

|

Arnold Adimabua Ojugo, Patrick Ogholuwarami Ejeh, Christopher Chukwufunaya Odiakaose, Andrew Okonji Eboka, Frances Uchechukwu Emordi

|

108-115

|

|

Debani Prasad Mishra, Nandini Agarwal, Dhruvi Shah, Surender Reddy Salkuti

|

116-122

|

|

Narmadha Ganesamoorthy, B. Sakthivel, Deivasigamani Subbramania, K. Balasubadra

|

123-130

|